Research Direction:

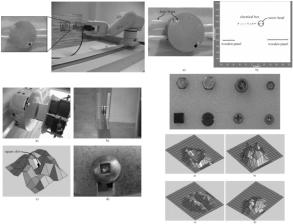

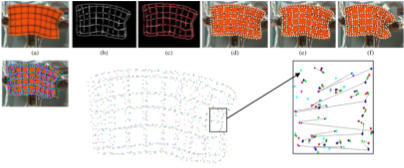

AI for Engineering Applications: Sensor systems, mission planning, robot grasping/manipulation, autonomous systems

Drawing inspiration from human vision-touch interaction, in which vision assists tactile manipulation tasks, this project addresses 3D object recognition from tactile data whose acquisition is guided by visual information. An improved computational visual attention model is first applied to images collected from multiple viewpoints around an object to identify regions that attract visual attention. Information about color, intensity, orientation, symmetry, curvature, contrast and entropy is combined for this purpose. Interest points are then extracted from these regions of interest using a technique that takes into account the best viewpoint of the object. Because moving and positioning the tactile sensor to probe the object surface at the identified interest points generally takes a long time, the local data acquisition is first simulated to choose the most promising approach to interpret it. For object recognition, the tactile images are analyzed with various classifiers, and a similarity-based method is employed to select the best candidate tactile images to train the classifier. Among the tested algorithms, the best performance is achieved by the k-nearest neighbor classifier, both for simulated data (87.89% for 4 objects and 75.82% for 6 objects) and for real tactile sensor data (72.25% for 4 objects and 70.47% for 6 objects).
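The pipeline above (fusing feature maps into a saliency map, picking interest points, then classifying tactile images with k-nearest neighbors) can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's implementation: the feature maps are assumed to be precomputed 2D arrays of equal size, the fusion is a simple weighted sum of min-max normalized maps, interest points are just the most salient pixels (the viewpoint-aware selection is not reproduced), and tactile images are assumed to be flattened into vectors. All function names are hypothetical.

```python
import numpy as np

def normalize_map(m):
    """Rescale a feature map to [0, 1]; a flat map becomes all zeros."""
    m = np.asarray(m, dtype=float)
    span = m.max() - m.min()
    return (m - m.min()) / span if span > 0 else np.zeros_like(m)

def saliency_map(feature_maps, weights=None):
    """Weighted sum of normalized feature maps (color, intensity, orientation, ...)."""
    maps = [normalize_map(m) for m in feature_maps]
    if weights is None:
        weights = [1.0 / len(maps)] * len(maps)  # hypothetical equal weighting
    return sum(w * m for w, m in zip(weights, maps))

def top_interest_points(saliency, k):
    """Return (row, col) indices of the k most salient pixels, most salient first."""
    order = np.argsort(saliency, axis=None)[::-1][:k]
    return [tuple(int(v) for v in np.unravel_index(i, saliency.shape)) for i in order]

def knn_predict(train_X, train_y, x, k=3):
    """Classify one flattened tactile image by majority vote of its k nearest neighbors."""
    train_X = np.asarray(train_X, dtype=float)
    train_y = np.asarray(train_y)
    dists = np.linalg.norm(train_X - np.asarray(x, dtype=float), axis=1)
    nearest = np.argsort(dists)[:k]
    labels, counts = np.unique(train_y[nearest], return_counts=True)
    return labels[np.argmax(counts)]  # ties go to the label that sorts first
```

The similarity-based selection of training images is not shown; in this sketch every labeled tactile image is simply kept as a training example.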

Support:

Publications:


•

G. Rouhafzay and A.-M. Cretu, "An Application of Deep Learning to Tactile Data for Object Recognition under Visual Guidance", MDPI Sensors, vol. 19, art. 1534, 2019, pp. 1-13, doi: 10.3390/s19071534, NEW.

•

G. Rouhafzay and A.-M. Cretu, "Object Recognition from Haptic Glance at Visually Salient Locations", IEEE Trans. Instrum. & Meas., Mar. 2019, NEW.

•

G. Rouhafzay and A.-M. Cretu, "A Visuo-Haptic Framework for Object Recognition Inspired from Human Tactile Perception", MDPI Int. Electron. Conf. Sensors and Applications, Proceedings 4(47), 2019, pp. 1:7, doi:10.3390/ecsa-5-05754, NEW.


•

G. Rouhafzay and A.-M. Cretu, "A Virtual Tactile Sensor with Adjustable Precision and Size for Object Recognition", IEEE Int. Conf. Computational Intelligence and Virtual Environments for Measurement Systems and Applications, Ottawa, Canada, 2018.


•

G. Rouhafzay, N. Pedneault and A.-M. Cretu, "A 3D Visual Attention Model to Guide Tactile Data Acquisition for Object Recognition", accepted for publication, 4th Int. Electron. Conf. Sensors and Applications, 15-30 Nov. 2017, Sciforum El. Conf. Series, 2017.


•

N. Pedneault and A.-M. Cretu, "3D Object Recognition from Tactile Array Data Acquired at Salient Points", IEEE Int. Symp. Robotics and Intelligent Sensors, pp. 150-156, Ottawa, Canada, 2017 (Best Paper Finalist Award).

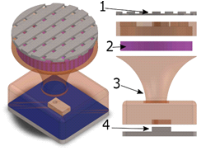


The ill-defined nature of tactile information makes tactile sensing the last frontier for robots that can handle everyday objects and interact with humans through contact. Crossing this frontier requires attention to many design aspects: sensor placement, electronic/mechanical hardware, methods to access and acquire signals, calibration, and algorithms to process and interpret sensing data in real time. This project aims at the design and hardware implementation of a bio-inspired tactile module that comprises a 32-taxel array, a 9-DOF MARG (Magnetic, Angular Rate, and Gravity) sensor, a flexible compliant structure and a deep pressure sensor.
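To make the module's composition concrete, a single reading can be held in a small record type. The field names, the split of the 9-DOF MARG sensor into three 3-axis sensors (magnetometer, gyroscope, accelerometer) and the flat 42-element feature layout are illustrative assumptions, not the module's actual data format.

```python
from dataclasses import dataclass
import numpy as np

N_TAXELS = 32  # taxel count stated in the project description

@dataclass
class TactileSample:
    """One reading of the tactile module; the field layout is a hypothetical example."""
    taxels: np.ndarray    # (32,) taxel array readings
    accel: np.ndarray     # (3,) accelerometer, the "gravity" part of MARG
    gyro: np.ndarray      # (3,) gyroscope, the "angular rate" part of MARG
    mag: np.ndarray       # (3,) magnetometer, the "magnetic" part of MARG
    deep_pressure: float  # single deep pressure sensor value

    def __post_init__(self):
        # Coerce inputs to float arrays and check the expected sizes
        self.taxels = np.asarray(self.taxels, dtype=float)
        if self.taxels.shape != (N_TAXELS,):
            raise ValueError(f"expected {N_TAXELS} taxels, got shape {self.taxels.shape}")
        for name in ("accel", "gyro", "mag"):
            arr = np.asarray(getattr(self, name), dtype=float)
            if arr.shape != (3,):
                raise ValueError(f"{name} must have 3 axes, got shape {arr.shape}")
            setattr(self, name, arr)
        self.deep_pressure = float(self.deep_pressure)

    def to_vector(self) -> np.ndarray:
        """Flatten all modalities into one 42-element feature vector (32 + 9 + 1)."""
        return np.concatenate([self.taxels, self.accel, self.gyro, self.mag, [self.deep_pressure]])
```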

Publications:

•

T.E.A. Oliveira, V.P. Fonseca, B.M.R. Lima, A.-M. Cretu and E.M. Petriu, "End-Effector Approach Flexibilization in a Surface Exploration Task Using a Bioinspired Tactile Sensing Module", IEEE Int. Symp. Robotics and Sensor Environments, Ottawa, pp. 177-182, 2019, NEW.

•

T.E.A. Oliveira, A.-M. Cretu and E.M. Petriu, "A Multi-Modal Bio-Inspired Tactile Sensing Module for Surface Characterization", MDPI Sensors, vol. 17, art. 1187, 2017, doi: 10.3390/s17061187.

•

T.E.A. Oliveira, A.-M. Cretu and E.M. Petriu, “Multi-Modal Bio-Inspired Tactile Module”, IEEE Sensors, vol. 17, issue 11, pp. 3231-3243, Apr. 2017.

•

T.E.A. Oliveira, B.M.R. Lima, A.-M. Cretu and E.M. Petriu, "Tactile Profile Classification Using a Multimodal MEMs-Based Sensing Module", 3rd Int. Electron. Conf. Sensors and Applications, 15-30 Nov. 2016, Sciforum El. Conf. Series, Vol. 3, 2016, E007, doi: 10.3390/ecsa-3-E007.

•

T.E.A. Oliveira, V.P. Fonseca, A.-M. Cretu, E.M. Petriu, “Multi-Modal Bio-Inspired Tactile Module”, UOttawa Graduate and Research Day, University of Ottawa, Mar. 2016 (Research Poster Prize in Electrical Engineering, first place; IEEE Research Poster Prize, first place).


Robots are expected to recognize the properties of objects in order to handle them safely and efficiently in a variety of applications, such as health and elder

care, manufacturing, or high-risk environments. This project explores the issue

of surface characterization by monitoring the signals acquired by a novel bio-

them safely and efficiently in a variety of applications, such as health and elder

care, manufacturing, or high-risk environments. This project explores the issue

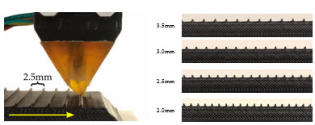

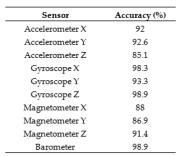

of surface characterization by monitoring the signals acquired by a novel bio- inspired tactile probe in contact with ridged surfaces. The tactile module

inspired tactile probe in contact with ridged surfaces. The tactile module comprises a 9-DOF MEMS MARG (Magnetic, Angular Rate, and Gravity)

comprises a 9-DOF MEMS MARG (Magnetic, Angular Rate, and Gravity) system and a deep MEMS pressure sensor embedded in a compliant structure

that mimics the function and the organization of mechanoreceptors in human

system and a deep MEMS pressure sensor embedded in a compliant structure

that mimics the function and the organization of mechanoreceptors in human skin as well as the hardness of the human skin. When the modules tip slides over

a surface, the MARG unit vibrates and the deep pressure sensor captures the overall normal force

skin as well as the hardness of the human skin. When the modules tip slides over

a surface, the MARG unit vibrates and the deep pressure sensor captures the overall normal force exerted. The module is evaluated in two experiments. The first experiment compares the frequency

exerted. The module is evaluated in two experiments. The first experiment compares the frequency content of the data collected in two setups: one when the module is mounted over a linear motion carriage

that slides four grating patterns at constant velocities; the second when the module is carried by a robotic

finger in contact with the same grating patterns while performing a sliding motion, similar to the exploratory

motion employed by humans to detect object roughness. As expected, in the linear setup, the magnitude

spectrum of the sensors' output shows that the module can detect the applied stimuli with frequencies

content of the data collected in two setups: one when the module is mounted over a linear motion carriage

that slides four grating patterns at constant velocities; the second when the module is carried by a robotic

finger in contact with the same grating patterns while performing a sliding motion, similar to the exploratory

motion employed by humans to detect object roughness. As expected, in the linear setup, the magnitude

spectrum of the sensors' output shows that the module can detect the applied stimuli with frequencies ranging from 3.66Hz to 11.54Hz with an overall maximum error of ±0.1Hz. The second experiment shows

how localized features extracted from the data collected by the robotic finger setup over seven synthetic

ranging from 3.66Hz to 11.54Hz with an overall maximum error of ±0.1Hz. The second experiment shows

how localized features extracted from the data collected by the robotic finger setup over seven synthetic shapes can be used to classify them. The classification method consists on applying multiscale principal

components analysis prior to the classification with a multilayer neural network. Achieved accuracies from 85.1% to 98.9% for the various

shapes can be used to classify them. The classification method consists on applying multiscale principal

components analysis prior to the classification with a multilayer neural network. Achieved accuracies from 85.1% to 98.9% for the various sensor types demonstrate the usefulness of traditional MEMS as tactile sensors embedded into flexible substrates.
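For a grating with spatial period λ slid at constant speed v, the expected vibration frequency is f = v / λ; for example, a hypothetical 2 mm period at 10 mm/s gives 5 Hz. The sketch below estimates the dominant frequency from the one-sided FFT magnitude spectrum, which is one straightforward way to perform the kind of spectral check described above; it is not the project's processing chain, and the function names are hypothetical.

```python
import numpy as np

def expected_frequency(speed_mm_s, period_mm):
    """Temporal frequency (Hz) of a grating with a given spatial period at constant sliding speed."""
    return speed_mm_s / period_mm

def dominant_frequency(signal, fs):
    """Frequency (Hz) of the largest peak in the one-sided magnitude spectrum, DC excluded."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                       # remove DC offset (e.g. gravity on an accelerometer axis)
    spectrum = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    k = int(np.argmax(spectrum[1:])) + 1   # skip the DC bin
    return freqs[k]
```

The frequency resolution of this estimate is fs / N, so the recording must be long enough for the bins to be finer than the error of interest: 20 s at a hypothetical 100 Hz sampling rate gives 0.05 Hz bins.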

Publications:

•

T.E.A. Oliveira, A.-M. Cretu and E.M. Petriu, "A Multi-Modal Bio-Inspired Tactile Sensing Module for Surface Characterization", MDPI Sensors, vol. 17, art. 1187, 2017, doi: 10.3390/s17061187.

•

T.E.A. Oliveira, B.M.R. Lima, A.-M. Cretu and E.M. Petriu, "Tactile Profile Classification Using a Multimodal MEMs-Based Sensing Module", 3rd Int. Electron. Conf. Sensors and Applications, 15-30 Nov. 2016, Sciforum El. Conf. Series, Vol. 3, 2016, E007, doi: 10.3390/ecsa-3-E007.


The project addresses the design and implementation of an automated framework that provides the controller of a robotic hand with the information needed to ensure safe model-based manipulation of 3D deformable objects. Measurements of the interaction force at the fingertips and of the fingertip positions of a three-fingered robotic hand are associated with the contours of a deformed object tracked in a series of images using neural-network approaches. The resulting model not only captures the behavior of the object but also predicts its behavior for previously unseen interactions, without any assumption about the object's material. Such models allow the controller of the robotic hand to achieve better-controlled grasps and more elaborate manipulation capabilities.
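The association between fingertip measurements and tracked contours can be sketched as a regression problem. Two assumptions are made here: each tracked contour is first resampled to a fixed number of points by arc length, so that every frame yields a target vector of the same size, and a linear least-squares map is used as a deliberately simple stand-in for the neural-network models used in the project. The input layout (force and fingertip coordinates) and all function names are hypothetical.

```python
import numpy as np

def resample_contour(points, n):
    """Resample a closed 2D contour to n points equally spaced along its arc length."""
    pts = np.asarray(points, dtype=float)
    closed = np.vstack([pts, pts[:1]])                     # repeat first point to close the loop
    seg = np.linalg.norm(np.diff(closed, axis=0), axis=1)  # length of each edge
    s = np.concatenate([[0.0], np.cumsum(seg)])            # cumulative arc length
    targets = np.linspace(0.0, s[-1], n, endpoint=False)
    return np.stack([np.interp(targets, s, closed[:, 0]),
                     np.interp(targets, s, closed[:, 1])], axis=1)

def fit_deformation_model(inputs, contours):
    """Least-squares map from interaction measurements to flattened contours.

    A linear stand-in for the neural-network models described in the text.
    """
    X = np.asarray(inputs, dtype=float).reshape(len(inputs), -1)
    X = np.hstack([X, np.ones((X.shape[0], 1))])           # bias column
    Y = np.array([np.ravel(c) for c in contours], dtype=float)
    W, *_ = np.linalg.lstsq(X, Y, rcond=None)
    return W

def predict_contour(W, measurement):
    """Predict an (n, 2) contour for one measurement vector."""
    x = np.append(np.ravel(np.asarray(measurement, dtype=float)), 1.0)
    return (x @ W).reshape(-1, 2)
```

A nonlinear regressor could replace the least-squares step without changing the resampling stage, which is what keeps contours of different raw lengths comparable.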

Publications:

•

A.-M. Cretu, P. Payeur, and E.M. Petriu, “Soft Object Deformation Monitoring and Learning for Model-Based Robotic Hand Manipulation”, IEEE Trans. Systems, Man and Cybernetics - Part B, vol. 42, no. 3, Jun. 2012.

•

A.-M. Cretu, P. Payeur, and E.M. Petriu, “Learning and Prediction of Soft Object Deformation using Visual Analysis of Robot Interaction”, Proc. Int. Symp. Visual Computing, ISVC2010, Las Vegas, Nevada, USA, G. Bebis et al. (Eds), LNCS 6454, pp. 232-241, Springer, 2010.

•

F. Khalil, P. Payeur, and A.-M. Cretu, “Integrated Multisensory Robotic Hand System for Deformable Object Manipulation”, Proc. Int. Conf. Robotics and Applications, pp. 159-166, Cambridge, Massachusetts, US, 2010.

•

A.-M. Cretu, P. Payeur, E.M. Petriu and F. Khalil, “Estimation of Deformable Object Properties from Visual Data and Robotic Hand Interaction Measurements for Virtualized Reality Applications”, Proc. IEEE Int. Symp. Haptic Audio Visual Environments and Their Applications, pp. 168-173, Phoenix, AZ, Oct. 2010.

•

A.-M. Cretu, P. Payeur, E.M. Petriu and F. Khalil, “Deformable Object Segmentation and Contour Tracking in Image Sequences Using Unsupervised Networks”, Proc. Canadian Conf. Computer and Robot Vision, pp. 277-284, Ottawa, Canada, May 2010.


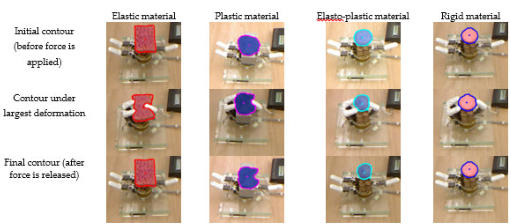

Performing tasks with a robot hand often requires complete knowledge of the manipulated object, including its properties (shape, rigidity, surface texture) and its location in the environment, to ensure safe and efficient manipulation. While well-established procedures exist for the manipulation of rigid objects, and several approaches exist for linear or planar deformable objects such as ropes or fabric, research on the characterization of deformable objects occupying a volume remains relatively limited. The project proposes an approach for tracking the deformation of non-rigid objects under robot hand manipulation using RGB-D data. The purpose is to automatically classify deformable objects as rigid, elastic, plastic, or elasto-plastic, based on the material they are made of, and to support recognition of the category of such objects through a robotic probing process in order to enhance manipulation capabilities. The proposed approach combines classical color and depth image processing techniques with a novel combination of the fast level set method and a log-polar mapping of the visual data to robustly detect and track the contour of a deformable object in an RGB-D data stream. Dynamic time warping is employed to characterize the object properties independently of the varying length of the tracked contour as the object deforms. The proposed solution achieves a classification rate of up to 98.3% over all material categories. When integrated in the control loop of a robot hand, it can contribute to a stable grasp and to safe manipulation that preserves the physical integrity of the object.
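Dynamic time warping compares sequences of different lengths by finding the cheapest monotonic alignment between them. The sketch below implements the classic DTW recurrence with an absolute-difference local cost and uses it in a 1-nearest-neighbor classifier. Which per-frame quantity is extracted from the tracked contour is not specified here, so the reference sequences in the usage check (a hypothetical normalized deformation measure that stays at zero for rigid objects, returns to zero for elastic ones and stays deformed for plastic ones) are purely illustrative, as are the function names.

```python
import numpy as np

def dtw_distance(a, b):
    """Classic dynamic time warping distance with absolute-difference local cost."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    n, m = len(a), len(b)
    D = np.full((n + 1, m + 1), np.inf)
    D[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of: step in a only, step in b only, or step in both
            D[i, j] = cost + min(D[i - 1, j], D[i, j - 1], D[i - 1, j - 1])
    return D[n, m]

def classify_material(sequence, references):
    """1-nearest-neighbor label among (label, reference_sequence) pairs under DTW."""
    label, _ = min(references, key=lambda item: dtw_distance(sequence, item[1]))
    return label
```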

Collaborators:

Publications:

•

F. Hui, P. Payeur and A.-M. Cretu, "Visual Tracking and Classification of Soft Object Deformation under Robot Hand Interaction", MDPI Robotics, vol. 6, issue 1, art. 5, 2017, doi: 10.3390/robotics6010005.

•

F. Hui, P. Payeur, and A.-M. Cretu, "In-Hand Object Material Characterization with Fast Level Set in Log-Polar Domain and Dynamic Time Warping", IEEE Int. Conf. Instrumentation and Measurement Technology, pp. 730-735, Torino, Italy, May 2017.


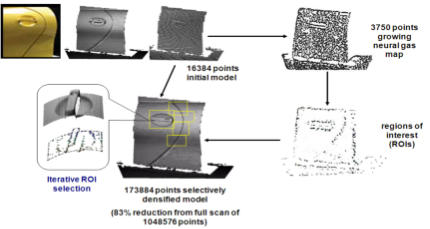

This project explores aspects of intelligent sensing for advanced robotic applications, with the main objective of designing approaches for the automatic selection of regions of observation for fixed and mobile sensors, so that they collect only relevant measurements without human guidance. The proposed neural gas network solution selects regions of interest for further sampling from a cloud of sparsely collected 3D measurements. The technique automatically determines bounded areas where sensing is required at higher resolution to accurately map 3D surfaces. It therefore provides significant benefits over brute-force strategies, as scanning time is reduced and the size of the dataset is kept manageable.
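The neural gas update at the core of this approach can be sketched as below. It is the classic rank-based rule: every reference vector moves toward each sample by a step that decays exponentially with its distance rank, and both the step size and the neighborhood range are annealed over time. The annealing constants, the random initialization from the data and the function name are common textbook choices assumed here; how the project then derives bounded regions of interest from the trained units is not reproduced.

```python
import numpy as np

def neural_gas(data, n_units, t_max=2000, eps=(0.5, 0.01), lam=None, seed=0):
    """Fit neural gas reference vectors to a 3D point cloud with the rank-based update rule."""
    rng = np.random.default_rng(seed)
    data = np.asarray(data, dtype=float)
    if lam is None:
        lam = (n_units / 2.0, 0.01)  # assumed initial/final neighborhood range
    # Initialize reference vectors from randomly chosen samples
    w = data[rng.choice(len(data), n_units, replace=False)].copy()
    for t in range(t_max):
        frac = t / t_max
        e = eps[0] * (eps[1] / eps[0]) ** frac  # exponentially annealed step size
        l = lam[0] * (lam[1] / lam[0]) ** frac  # exponentially annealed neighborhood range
        x = data[rng.integers(len(data))]
        # Rank of each unit by distance to the sample (0 = closest)
        ranks = np.argsort(np.argsort(np.linalg.norm(w - x, axis=1)))
        w += e * np.exp(-ranks / l)[:, None] * (x - w)
    return w
```

Because every unit is updated according to its rank rather than only the winner, units spread over the point cloud instead of leaving some unused, which is what makes the trained units a compact summary of where measurements lie.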

Collaborators/Support:

Publications:

•

P. Payeur, P. Curtis, A.-M. Cretu, "Computational Methods for Selective Acquisition of Depth Measurements: an Experimental Evaluation", Int. Conf. Advanced Concepts for Intelligent Vision Systems, Poznan, Poland, J. Blanc-Talon et al. (Eds.) LNCS 8192, pp. 389-401, 2013.

•

P. Payeur, P. Curtis, A.-M. Cretu, "Computational Methods for Selective Acquisition of Depth Measurements in Machine Perception", IEEE Int. Conf. Systems, Man, and Cybernetics, Manchester, UK, pp. 876-881, 2013.

•

A.-M. Cretu, P. Payeur, and E.M. Petriu, “Selective Range Data Acquisition Driven by Neural Gas Networks”, IEEE Trans. Instrumentation and Measurement, vol. 58, no. 6, pp. 2634-2642, 2009.

•

A.-M. Cretu, P. Payeur, and E.M. Petriu, “Neural Gas and Growing Neural Gas Networks for Selective 3D Sensing: A Comparative Study”, Sensors & Transducers, vol. 5, pp. 119-134, 2009.

•

A.-M. Cretu, P. Payeur, and E.M. Petriu, “Selective Tactile Data Acquisition on 3D Deformable Objects for Virtualized Reality Applications”, Proc. IEEE Int. Workshop Computational Intelligence in Virtual Environments, pp. 14-19, Nashville, TN, USA, Apr. 2009.

•

A.-M. Cretu, E.M. Petriu, and P. Payeur, “Growing Neural Gas Networks for Selective 3D Scanning”, Proc. IEEE Int. Workshop on Robotic and Sensors Environments, pp. 108-113, Ottawa, Canada, Oct. 2008.

•

A.-M. Cretu, P. Payeur, and E.M. Petriu, “Selective Vision Sensing Based on Neural Gas Network”, Proc. IEEE Int. Conf. Instrumentation and Measurement Technology, pp. 478-483, Vancouver, Canada, May 2008 (Best Student Paper Award).


Controlling robotic interventions on small devices creates important challenges at the sensing stage, as the resolution limits of non-contact sensors are rapidly reached. Integrating haptic sensors to refine the information provided by vision sensors is a very promising approach in the development of autonomous robotic systems, as it reproduces the multiplicity of sensing sources used by humans. This project proposes an intelligent multimodal sensor system developed to enhance the haptic control of robotic manipulation of small 3D objects. The proposed system combines a laser range finder with a 16x16 array of Force Sensing Resistor (FSR) elements, which refines 3D shape measurements in selected areas previously monitored with the range finder. Using the integrated technologies, the sensor system is able to recognize small objects that cannot be accurately differentiated through range measurements, and provides an estimate of the objects' orientation. The characteristics of the system are demonstrated in the context of a robotic intervention that requires small objects to be localized and identified by their shape and orientation.
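One common way to estimate the orientation of a contact footprint in a pressure array such as the 16x16 FSR grid is through second-order central image moments: the principal axis angle is θ = ½·atan2(2μ11, μ20 − μ02). This is offered as an illustrative technique, not as the method used in the project, and the function name is hypothetical.

```python
import numpy as np

def tactile_orientation(image):
    """Principal-axis angle (degrees) of the contact blob in a pressure image.

    x is the column index and y the row index (growing downward), so a positive
    angle corresponds to a blob running from top-left to bottom-right as displayed.
    """
    img = np.asarray(image, dtype=float)
    total = img.sum()
    if total <= 0:
        raise ValueError("no contact detected")
    rows, cols = np.indices(img.shape)
    xc = (cols * img).sum() / total  # pressure-weighted centroid
    yc = (rows * img).sum() / total
    mu20 = ((cols - xc) ** 2 * img).sum()
    mu02 = ((rows - yc) ** 2 * img).sum()
    mu11 = ((cols - xc) * (rows - yc) * img).sum()
    return np.degrees(0.5 * np.arctan2(2 * mu11, mu20 - mu02))
```

Note that a symmetric footprint (for example a disc) has μ20 = μ02 and μ11 = 0, so its angle is undefined; in practice a check on the moment eccentricity would be needed before trusting the estimate.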

Publications:

•

P. Payeur, C. Pasca, A.-M. Cretu, and E.M. Petriu, “Intelligent Haptic Sensor System for Robotic Manipulation”, IEEE Trans. Instrumentation and Measurement, vol. 54, no. 4, pp. 1583-1592, 2005.


•

C. Pasca, P. Payeur, E.M. Petriu, A.-M. Cretu, “Intelligent Haptic Sensor System for Robotic Manipulation”, Proc. IEEE Int. Conf. Instrumentation and Measurement Technology, pp. 279-284, Italy, 2004.


•

E. M. Petriu, S.K.S. Yeung, S.R. Das, A.-M. Cretu, and H.J.W. Spoelder, “Robotic Tactile Recognition of Pseudo-Random Encoded Objects”, IEEE Trans. Instrumentation and Measurement, vol. 53, no. 5, pp. 1425-1432, 2004.


This project addresses issues inherent to the design of navigation planning and control systems required for adaptive monitoring of pollutants in inland waters. It proposes a new system for estimating water

adaptive monitoring of pollutants in inland waters. It proposes a new system for estimating water quality, in particular the chlorophyll-A concentration, by using satellite remote sensing data. The aim

is to develop an intelligent model based on supervised learning, with the goal of improving the

quality, in particular the chlorophyll-A concentration, by using satellite remote sensing data. The aim

is to develop an intelligent model based on supervised learning, with the goal of improving the precision of the evaluation of chlorophyll-A concentration. To achieve this, an intelligent system

precision of the evaluation of chlorophyll-A concentration. To achieve this, an intelligent system based on statistical learning is employed to classify the waters a priori, before estimating the

based on statistical learning is employed to classify the waters a priori, before estimating the chlorophyll-A concentration with neural network models. A control architecture is proposed to guide

the trajectory of an aquatic platform to collect in-situ measurements It uses a multi-model

chlorophyll-A concentration with neural network models. A control architecture is proposed to guide

the trajectory of an aquatic platform to collect in-situ measurements It uses a multi-model classification/regression system to determine and forecast the spatial distribution of chlorophyll-A.

At the same time, the proposed architecture features a cost optimizing path planner. The approach

is validated using data collected on Lake Winnipeg in Canada.
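The two-stage idea (classify the water first, then apply a class-specific estimator) can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's model: nearest-centroid classification and per-class linear least squares stand in for the statistical-learning classifier and the neural networks, the class name `ClassifyThenRegress` is hypothetical, and the data are synthetic rather than Lake Winnipeg measurements.

```python
import numpy as np

class ClassifyThenRegress:
    """Hypothetical two-stage estimator: classify each sample into a water class,
    then apply that class's own regression model. Nearest-centroid classification
    and per-class linear least squares are stand-ins for the statistical-learning
    classifier and the neural networks described in the text."""

    def fit(self, X, y, labels):
        X, y, labels = np.asarray(X, float), np.asarray(y, float), np.asarray(labels)
        self.classes_ = np.unique(labels)
        # One centroid per water class, used later to classify new samples a priori.
        self.centroids_ = np.array([X[labels == c].mean(axis=0) for c in self.classes_])
        # One linear model (weights plus bias) per water class.
        self.coefs_ = {}
        for c in self.classes_:
            Xc = X[labels == c]
            A = np.hstack([Xc, np.ones((len(Xc), 1))])
            self.coefs_[c] = np.linalg.lstsq(A, y[labels == c], rcond=None)[0]
        return self

    def classify(self, X):
        X = np.asarray(X, float)
        # Distance from every sample to every class centroid; pick the nearest.
        d = np.linalg.norm(X[:, None, :] - self.centroids_[None, :, :], axis=2)
        return self.classes_[np.argmin(d, axis=1)]

    def predict(self, X):
        X = np.asarray(X, float)
        A = np.hstack([X, np.ones((len(X), 1))])
        return np.array([A[i] @ self.coefs_[c] for i, c in enumerate(self.classify(X))])

# Synthetic, illustrative data: one reflectance-like feature, two water classes
# with different feature-to-concentration relations.
X = [[0.0], [0.5], [1.0], [10.0], [10.5], [11.0]]
y = [1.0, 2.0, 3.0, 20.0, 19.5, 19.0]
labels = [0, 0, 0, 1, 1, 1]
model = ClassifyThenRegress().fit(X, y, labels)
print(model.predict([[0.25], [10.75]]))
```

Routing each sample through a class-specific model lets each regressor specialize on one optical water type; replacing the stand-in regressors with neural networks would leave the two-stage structure unchanged.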

Collaborators:

Publication:

• F. Halal, P. Pedrocca, T. Hirose, A.-M. Cretu, and M. Zaremba, “Remote-Sensing Based Adaptive Path Planning for an Aquatic Platform to Monitor Water Quality”, IEEE Symp. Robotic and Sensors Environments, pp. 43-48, Timisoara, Romania, Oct. 2014.

This project addresses the problem of node selection in risk-aware robotic sensor networks applied to critical infrastructure protection, using computational intelligence techniques. The goal is to maintain a secure perimeter around a critical infrastructure by detecting high-risk network events and mitigating them through a response involving the most suitable robotic assets.
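Selecting the most suitable robotic asset for a detected high-risk event can be framed as an auction, as the publication titles for this project suggest. The sketch below is a hypothetical single-round, sealed-bid version: the `Robot` fields, the distance-over-battery bid, and the risk threshold are illustrative assumptions, not the bidding scheme or multi-objective formulation used in the cited work.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Robot:
    name: str
    x: float
    y: float
    battery: float            # remaining charge as a fraction in (0, 1]
    capabilities: frozenset   # e.g. {"camera", "gas"}

def bid(robot, event):
    """Hypothetical bid (lower is better): travel distance inflated by low battery.
    A robot lacking any capability the event requires bids infinity."""
    if not event["requires"] <= robot.capabilities:
        return math.inf
    distance = math.hypot(robot.x - event["x"], robot.y - event["y"])
    return distance / max(robot.battery, 1e-6)

def select_responders(robots, event, k=1, risk_threshold=0.5):
    """Sealed-bid auction: only events at or above the risk threshold trigger a
    response; the k robots with the lowest finite bids are selected."""
    if event["risk"] < risk_threshold:
        return []
    bids = sorted((bid(r, event), r.name) for r in robots)
    return [name for b, name in bids if math.isfinite(b)][:k]

robots = [
    Robot("r1", 0.0, 0.0, 1.0, frozenset({"camera"})),
    Robot("r2", 5.0, 0.0, 0.5, frozenset({"camera", "gas"})),
    Robot("r3", 1.0, 1.0, 1.0, frozenset({"gas"})),
]
event = {"x": 2.0, "y": 0.0, "requires": frozenset({"gas"}), "risk": 0.9}
print(select_responders(robots, event, k=2))  # ['r3', 'r2']
```

Requesting `k > 1` returns several responders ranked by bid, which is one simple way to model concurrent responses to the same event.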

Collaborators:

Publications:

• J. McCausland, R. Abielmona, R. Falcon, A.-M. Cretu, and E.M. Petriu, “On the Role of Multi-Objective Optimization in Risk Mitigation for Critical Infrastructures with Robotic Sensor Networks”, accepted for publication, ACM Conference Companion on Genetic and Evolutionary Computation, Vancouver, Canada, pp. 1269-1276, July 2014.

• J. McCausland, R. Abielmona, A.-M. Cretu, R. Falcon, and E.M. Petriu, “A Proactive Risk-Aware Robotic Sensor Network for Critical Infrastructure Protection”, poster session, 2013 Annual Research Review Healthcare Support through Information Technology Enhancements (hSITE), Montreal, QC, 18 Nov. 2013.

• J. McCausland, R. Abielmona, R. Falcon, A.-M. Cretu, and E.M. Petriu, “Auction-Based Node Selection of Optimal and Concurrent Responses for a Risk-Aware Robotic Sensor Network”, IEEE Int. Symp. Robotic and Sensors Environments, Washington, US, pp. 136-141, 21-23 Oct. 2013.

Installation of large solar photovoltaic (PV) systems is becoming common in cold-climate regions with significant snowfall. To better understand how the presence of snow degrades the electrical performance of PV cells, accurate modeling of snow-covered photovoltaic modules is essential. This project proposes a novel PV modeling methodology that represents the instantaneous electrical characteristics of uniformly snow-covered PV modules. The attenuation of solar radiation transmitted through a layer of snow is rigorously estimated based on the Giddings and LaChapelle theory. This yields the level of radiation that reaches the surface of the PV module through the snowpack, which is significantly affected by snow properties and thickness. The proposed modeling approach is based on the popular single-diode, five-parameter equivalent circuit model. The model parameters are updated from instantaneous voltage and current measurements and optimized with the particle swarm optimization (PSO) algorithm. The approach was successfully validated in outdoor tests using three different PV module technologies typically used in North American PV farms, under different cold weather conditions. In addition, the validity of the proposed model was investigated using real data obtained from the SCADA system of a 12-MW grid-connected PV farm. The proposed model can help improve PV performance under snow conditions and can serve as a useful tool for the design and selection of PV modules subject to snow accretion.
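The modeling chain described above (attenuated irradiance feeding a single-diode, five-parameter circuit whose parameters are fitted by PSO) can be sketched as below. Several assumptions apply: a plain exponential decay with a made-up extinction coefficient `k_ext` stands in for the Giddings and LaChapelle formulation; photocurrent is scaled linearly with irradiance and temperature effects are ignored; the 60-cell module parameters are hypothetical; and `pso_minimize` is a textbook global-best PSO, not the project's implementation.

```python
import numpy as np

K_B = 1.380649e-23     # Boltzmann constant, J/K
Q_E = 1.602176634e-19  # elementary charge, C

def transmitted_irradiance(g_surface, depth_m, k_ext=40.0):
    """Irradiance (W/m^2) reaching the cells under a uniform snow layer.
    Plain exponential decay; k_ext (1/m) is a hypothetical extinction coefficient."""
    return g_surface * np.exp(-k_ext * depth_m)

def single_diode_current(v, g, p, g_ref=1000.0, t_cell=298.15, n_cells=60):
    """Solve I = Iph - I0*(exp((V + I*Rs)/a) - 1) - (V + I*Rs)/Rsh for I with Newton's
    method, where p = (Iph_ref, I0, n, Rs, Rsh) and a = n*Ns*k*T/q."""
    iph_ref, i0, n, rs, rsh = p
    iph = iph_ref * g / g_ref             # photocurrent assumed proportional to irradiance
    a = n * n_cells * K_B * t_cell / Q_E  # modified ideality factor, V
    v = np.asarray(v, dtype=float)
    i = np.full_like(v, iph)              # start at Iph, on the right of the root
    for _ in range(100):
        e = np.exp((v + i * rs) / a)
        f = iph - i0 * (e - 1.0) - (v + i * rs) / rsh - i
        df = -i0 * e * rs / a - rs / rsh - 1.0
        step = f / df
        i = i - step
        if np.all(np.abs(step) < 1e-12):
            break
    return i

def fit_cost(p, v_meas, i_meas, g):
    """Mean squared error between modeled and measured currents (PSO objective)."""
    return float(np.mean((single_diode_current(v_meas, g, p) - i_meas) ** 2))

def pso_minimize(cost, lo, hi, n_particles=30, iters=200, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Textbook global-best particle swarm optimization within box bounds [lo, hi]."""
    rng = np.random.default_rng(seed)
    lo, hi = np.asarray(lo, dtype=float), np.asarray(hi, dtype=float)
    x = rng.uniform(lo, hi, size=(n_particles, lo.size))
    vel = np.zeros_like(x)
    pbest, pbest_cost = x.copy(), np.array([cost(xi) for xi in x])
    gbest = pbest[np.argmin(pbest_cost)].copy()
    for _ in range(iters):
        r1, r2 = rng.random(x.shape), rng.random(x.shape)
        # Inertia + pull toward each particle's best + pull toward the swarm's best.
        vel = w * vel + c1 * r1 * (pbest - x) + c2 * r2 * (gbest - x)
        x = np.clip(x + vel, lo, hi)
        c = np.array([cost(xi) for xi in x])
        better = c < pbest_cost
        pbest[better], pbest_cost[better] = x[better], c[better]
        gbest = pbest[np.argmin(pbest_cost)].copy()
    return gbest, float(pbest_cost.min())

# Hypothetical 60-cell module: (Iph_ref [A], I0 [A], n, Rs [ohm], Rsh [ohm]).
p_true = (8.5, 1e-9, 1.1, 0.3, 300.0)
g_snow = transmitted_irradiance(1000.0, 0.02)  # 2 cm of snow under the assumed k_ext
v = np.linspace(0.0, 35.0, 8)
i_clear = single_diode_current(v, 1000.0, p_true)
i_snow = single_diode_current(v, g_snow, p_true)
```

To fit parameters to measured I-V points, one could call `pso_minimize(lambda q: fit_cost(q, v_meas, i_meas, g), lo, hi)` with physically bounded `lo` and `hi`. Because I0 spans several orders of magnitude, searching over log10(I0) instead of I0 often makes the swarm's job easier.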

Publications:

• M. Khenar, K. Hosseini, S. Taheri, A.-M. Cretu, E. Pouresmaeil, and H. Taheri, “Particle Swarm Optimization-based Model and Analysis of Photovoltaic Module Characteristics in Snowy Conditions”, accepted for publication, IET Renewable Power Generation, Mar. 2019.


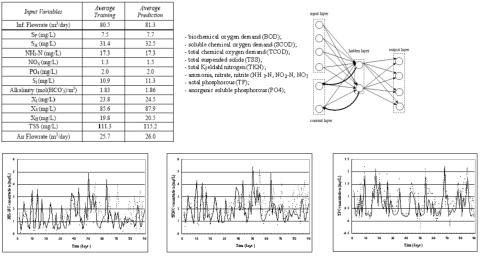

The inherently complex nature of the wastewater treatment process, the limited knowledge and description of the biological phenomena involved, and the large temporal fluctuations of the numerous parameters at play (flow rates and nutrient loadings) make the automatic control of a wastewater treatment plant a difficult problem to tackle. Online sensors for continuous measurement of wastewater components are prone to unpredictable breakdowns. Low-nutrient conditions in a wastewater treatment plant may prevent effective nitrogen and phosphorus removal for a considerable period. This project proposes neural-network modeling approaches for the model-based control of a wastewater treatment plant in terms of air flow rate and municipal wastewater components.
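A neural-network plant model of the kind mentioned above maps measured inputs to a quantity of interest. The sketch below is a generic one-hidden-layer network trained by gradient descent in NumPy, offered only as an illustration: the time-of-day input features, the synthetic daily-cycle target, the layer size, and the function name `train_mlp` are all assumptions, not the architecture or data of the cited work.

```python
import numpy as np

def train_mlp(X, y, hidden=8, lr=0.05, epochs=2000, seed=0):
    """Hypothetical one-hidden-layer tanh network trained by full-batch gradient
    descent on mean squared error. Returns a predict function and the loss history."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float).reshape(-1, 1)
    W1, b1 = rng.normal(0.0, 0.5, (X.shape[1], hidden)), np.zeros(hidden)
    W2, b2 = rng.normal(0.0, 0.5, (hidden, 1)), np.zeros(1)
    losses = []
    for _ in range(epochs):
        h = np.tanh(X @ W1 + b1)   # hidden activations
        err = h @ W2 + b2 - y      # prediction error
        losses.append(float(np.mean(err ** 2)))
        # Backpropagate the MSE gradient through both layers (W2 used before its update).
        g_out = 2.0 * err / len(X)
        g_h = (g_out @ W2.T) * (1.0 - h ** 2)
        W2 -= lr * (h.T @ g_out)
        b2 -= lr * g_out.sum(axis=0)
        W1 -= lr * (X.T @ g_h)
        b1 -= lr * g_h.sum(axis=0)

    def predict(X_new):
        h_new = np.tanh(np.asarray(X_new, dtype=float) @ W1 + b1)
        return (h_new @ W2 + b2).ravel()

    return predict, losses

# Synthetic daily cycle (assumed): time-of-day features -> normalized influent load.
t = np.linspace(0.0, 1.0, 48, endpoint=False)
X = np.column_stack([np.sin(2 * np.pi * t), np.cos(2 * np.pi * t)])
y = 0.6 * X[:, 0] + 0.2 * X[:, 1] ** 2
predict, losses = train_mlp(X, y)
print(losses[0], losses[-1])
```

In a model-based control loop, one way to use such a network is as a stand-in for a component measurement, for example while an online sensor is out of service.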

Publications:

• K.-Y. Ko, G.G. Patry, A.-M. Cretu, and E.M. Petriu, “Neural Network Model of Municipal Wastewater Components for a Wastewater Treatment Plant”, Proc. IEEE Int. Workshop on Advanced Environmental Sensing and Monitoring Techniques, pp. 23-28, Como, Italy, Jul. 2003.

• K.-Y. Ko, G.G. Patry, A.-M. Cretu, and E.M. Petriu, “Neural Network Model for Wastewater Treatment Plant Control”, Proc. IEEE Int. Workshop on Soft Computing Techniques in Instrumentation, Measurement and Related Applications, pp. 38-43, May 2003.

Research

© Ana-Maria Cretu 2019

Ana-Maria Cretu

Risk-aware wireless sensor network for critical infrastructure protection

Remote-sensing based adaptive path planning for a ship to monitor water quality

Tactile surface characterization and recognition

Pattern classification and recognition from tactile data

Visual attention guided tactile object recognition

Multimodal biologically-inspired tactile sensing

Deformable object tracking and modeling for robotic hand manipulation

Deformable object tracking for material characterization

Selective range data acquisition

Neural network models for wastewater treatment plant control

PSO-based study of photovoltaic panels in cold conditions