Research Direction:

Human-machine interaction. Multisensory acquisition & modeling of 3D objects

Human-machine interfaces

Virtual reality has already been successfully used as a therapeutic tool for the treatment of various phobias. Due to advances in 3D graphics and computing power, the real-time visual rendering of a virtual world poses no significant problems nowadays. However, haptic interaction with such environments remains a challenge. Therefore, one of the projects, in collaboration with the Cyberpsychology lab of UQO, explores haptic interaction with a dedicated virtual environment in spider phobia treatment to elicit disgust, as changes in fear and in disgust were shown to be highly associated with the observed decline in arachnophobic symptoms. A dedicated virtual environment was programmed in which a Novint Falcon haptic device is used to interact with a virtual spider. Other projects aim at the development of dedicated virtual environments and their evaluation as therapeutic tools for phobia treatment.

Collaborators:

Publications:

• M. Laforest, S. Bouchard, A.-M. Cretu, and O. Mesly, "Inducing an Anxiety Response Using a Contaminated Virtual Environment: Validation of a Therapeutic Tool for Obsessive-Compulsive Disorder", Frontiers in ICT (Information and Communication Technologies): Virtual Environments, vol. 3, article 18, Sep. 2016, doi: 10.3389/fict.2016.00018.

• M. Cavrag, G. Larivière, A.-M. Cretu, and S. Bouchard, "Interaction with Virtual Spiders for Eliciting Disgust in the Treatment of Phobias", IEEE Symp. Haptic Audio-Visual Environments and Games, pp. 29-34, Dallas, Texas, US, Oct. 2014.


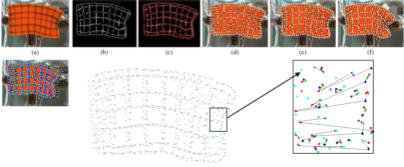

One of the tracks of the project aims at the development of a natural gesture interface that tracks and recognizes, in real time, the static and dynamic hand gestures of a user based on depth data collected by a Kinect sensor. A novel algorithm is proposed to improve the scanning time in order to identify the first pixel on the hand contour within this space. Starting from this pixel, a directional search algorithm allows for the identification of the entire hand contour. The K-curvature algorithm is then employed to locate the fingertips over the contour, and dynamic time warping is used to select gesture candidates and to recognize gestures by comparing an observed gesture with a series of pre-recorded reference gestures. Two possible applications of this work are discussed and evaluated: one for the interpretation of sign digits and popular gestures for a friendlier human-machine interaction, the other for the natural control of a software interface.
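The fingertip step can be illustrated with a minimal Python/NumPy sketch of the K-curvature test. The sketch assumes the hand contour is already available as an ordered, closed array of (x, y) points. Several elements are illustrative assumptions and are not the settings of the published method: the parameter values (`k`, `max_angle_deg`), the centroid-distance check used to reject valleys between fingers, and the synthetic star-shaped contour standing in for a hand.

```python
import numpy as np


def k_curvature_angles(contour: np.ndarray, k: int) -> np.ndarray:
    """Angle (radians) at each point of a closed contour between the vectors
    pointing to the points k steps backward and k steps forward."""
    n = len(contour)
    idx = np.arange(n)
    a = contour[(idx - k) % n] - contour  # vector to the k-th previous point
    b = contour[(idx + k) % n] - contour  # vector to the k-th next point
    cos = np.einsum("ij,ij->i", a, b) / (
        np.linalg.norm(a, axis=1) * np.linalg.norm(b, axis=1) + 1e-12
    )
    return np.arccos(np.clip(cos, -1.0, 1.0))


def find_fingertips(contour: np.ndarray, k: int = 5, max_angle_deg: float = 60.0) -> list:
    """Indices of contour points that are sharp, convex and locally sharpest."""
    n = len(contour)
    angles = k_curvature_angles(contour, k)
    dist = np.linalg.norm(contour - contour.mean(axis=0), axis=1)
    tips = []
    for i in range(n):
        if angles[i] >= np.deg2rad(max_angle_deg):
            continue  # not sharp enough to be a fingertip
        # Heuristic: fingertips stick out from the palm, valleys between fingers do not.
        if dist[i] <= max(dist[(i - k) % n], dist[(i + k) % n]):
            continue
        window = angles[(i + np.arange(-k, k + 1)) % n]
        if angles[i] == window.min():  # keep a single point per sharp peak
            tips.append(i)
    return tips


def star_contour(n_tips=5, r_out=10.0, r_in=4.0, pts_per_edge=20) -> np.ndarray:
    """Synthetic closed contour with n_tips sharp spikes (hypothetical stand-in for a hand)."""
    theta = np.pi / 2 + np.arange(2 * n_tips) * np.pi / n_tips
    radius = np.where(np.arange(2 * n_tips) % 2 == 0, r_out, r_in)
    verts = np.column_stack([radius * np.cos(theta), radius * np.sin(theta)])
    pts = [
        verts[j] + t * (verts[(j + 1) % len(verts)] - verts[j])
        for j in range(len(verts))
        for t in np.linspace(0.0, 1.0, pts_per_edge, endpoint=False)
    ]
    return np.array(pts)


print(find_fingertips(star_contour()))
```

On the synthetic star, only the five spike tips pass both the angle and the convexity tests. A real depth-based hand contour would need its own tuning of `k` and of the angle threshold.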

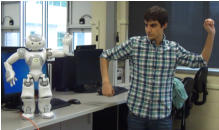

Another track of the project aims at the development of a system capable of controlling the arm movement of a robot by mimicking the gestures of an actor captured by a markerless vision sensor. The Kinect for Xbox is used to retrieve angle information at the level of the actor's arm, and an interaction module transforms it into a usable format for real-time robot arm control. To avoid self-collisions, the distance between the two arms is computed in real time, and the motion is not executed if this distance becomes smaller than twice the diameter of the limb. A software architecture is proposed and implemented for this purpose. The feasibility of our approach is demonstrated on a NAO robot.
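The self-collision rule above can be sketched as follows. The sketch assumes each arm is modeled as two straight segments (shoulder-elbow and elbow-wrist) whose 3D joint positions are known for the commanded pose. The helper names, the segment model and the example poses are hypothetical. The distance computation is the standard clamped closest-point test between two line segments, and the threshold is the twice-the-limb-diameter rule stated above.

```python
import numpy as np


def _clamp01(x: float) -> float:
    return max(0.0, min(1.0, x))


def segment_distance(p1, q1, p2, q2, eps: float = 1e-12) -> float:
    """Minimum distance between 3D segments p1-q1 and p2-q2 via the clamped
    closest-point parameters s, t in [0, 1]."""
    p1, q1, p2, q2 = (np.asarray(v, dtype=float) for v in (p1, q1, p2, q2))
    d1, d2, r = q1 - p1, q2 - p2, p1 - p2
    a, e, f = d1 @ d1, d2 @ d2, d2 @ r
    if a <= eps and e <= eps:  # both segments degenerate to points
        s = t = 0.0
    elif a <= eps:  # first segment is a point
        s, t = 0.0, _clamp01(f / e)
    else:
        c = d1 @ r
        if e <= eps:  # second segment is a point
            s, t = _clamp01(-c / a), 0.0
        else:
            b = d1 @ d2
            denom = a * e - b * b
            # Parallel segments (denom ~ 0): any s works, start from s = 0.
            s = _clamp01((b * f - c * e) / denom) if denom > eps else 0.0
            t = (b * s + f) / e
            if t < 0.0:
                s, t = _clamp01(-c / a), 0.0
            elif t > 1.0:
                s, t = _clamp01((b - c) / a), 1.0
    return float(np.linalg.norm((p1 + s * d1) - (p2 + t * d2)))


def motion_is_safe(left_joints, right_joints, limb_diameter: float) -> bool:
    """left_joints / right_joints: (shoulder, elbow, wrist) positions of the
    commanded pose. The motion is vetoed if the arms get closer than 2 diameters."""
    left = [(left_joints[0], left_joints[1]), (left_joints[1], left_joints[2])]
    right = [(right_joints[0], right_joints[1]), (right_joints[1], right_joints[2])]
    d_min = min(segment_distance(a0, a1, b0, b1) for a0, a1 in left for b0, b1 in right)
    return d_min >= 2.0 * limb_diameter


# Hypothetical poses in metres: both arms hanging down, 0.2 m apart ...
left = ((0.0, 0.1, 0.0), (0.0, 0.1, -0.1), (0.0, 0.1, -0.2))
right = ((0.0, -0.1, 0.0), (0.0, -0.1, -0.1), (0.0, -0.1, -0.2))
# ... and a pose where the left forearm sweeps across the right elbow.
left_cross = ((0.0, 0.1, 0.0), (0.0, 0.1, -0.1), (0.0, -0.15, -0.1))
print(motion_is_safe(left, right, 0.05), motion_is_safe(left_cross, right, 0.05))
```

The check runs on the commanded pose before it is sent to the robot, which matches the "not executed" behavior described above.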


Publications:

• G. Plouffe, P. Payeur, and A.-M. Cretu, "Real-Time Posture Control for a Robotic Manipulator Using Natural Human-Computer Interaction", MDPI Int. Electron. Conf. Sensors and Applications, Proceedings 4(31), 2019, pp. 1:7, doi: 10.3390/ecsa-5-05751.

• G. Plouffe and A.-M. Cretu, "Static and Dynamic Hand Gesture Recognition in Depth Data Using Dynamic Time Warping", IEEE Trans. Instrumentation and Measurement, vol. 65, no. 2, pp. 305-316, Feb. 2016.

• G. Plouffe, A.-M. Cretu, and P. Payeur, "Natural Human-Computer Interaction Using Hand Gestures", IEEE Symp. Haptic Audio-Visual Environments and Games, pp. 57-62, Ottawa, ON, Oct. 2015.

• S. Filiatrault and A.-M. Cretu, "Human Arm Motion Imitation Using a Humanoid Robot", IEEE Int. Symp. Robotic and Sensors Environments, pp. 31-36, Timisoara, Romania, 2014.

Multisensory acquisition & modeling of 3D objects

The long-term goal of this research program is to develop enhanced sensing and modeling techniques for applications that require higher realism, by means of 3D object models based on a combination of real multisensory measurements, along with their acquisition, integration, representation and interpretation.

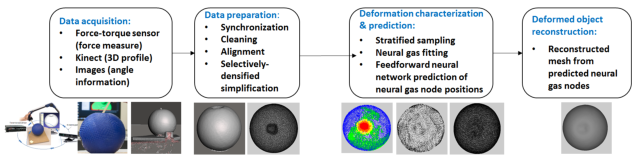

The realistic representation of deformations is still an active area of research, especially for deformable objects whose behavior cannot be simply described in terms of elasticity parameters. This project proposes a data-driven, neural-network-based approach for implicitly capturing and predicting the deformations of an object subject to external forces. Visual data, in the form of 3D point clouds gathered by a Kinect sensor, is collected over an object while forces are exerted by means of the probing tip of a force-torque sensor. A novel approach based on neural gas fitting is proposed to describe the particularities of a deformation over the selectively simplified 3D surface of the object, without requiring knowledge of the object material. An alignment procedure, a distance-based clustering and inspiration from stratified sampling support this process. The resulting representation is denser in the region of the deformation (an average of 96.6% perceptual similarity with the collected data in the deformed area), while still preserving the overall shape of the object (86% similarity over the entire surface) and using, on average, only 40% of the number of vertices in the mesh. A series of feedforward neural networks is then trained to predict the mapping between the force parameters characterizing the interaction with the object and the change in the object shape, as captured by the fitted neural gas nodes. This series of networks allows for the prediction of the deformation of an object when subject to unknown interactions.
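For readers unfamiliar with neural gas, the core fitting step can be sketched with the classic neural gas update of Martinetz and Schulten. For each randomly drawn sample, every node moves toward the sample by an amount that decays exponentially with the node's distance rank. This is a minimal sketch under assumed parameter schedules. The alignment, distance-based clustering and stratified-sampling steps described above are not reproduced, and the dented plate is synthetic data.

```python
import numpy as np


def train_neural_gas(points, n_nodes=40, n_iter=4000,
                     eps=(0.5, 0.005), lam=(10.0, 0.1), seed=0):
    """Classic neural gas: each node moves toward a random sample by a step
    that decays exponentially with its distance rank (0 = closest node)."""
    rng = np.random.default_rng(seed)
    nodes = points[rng.choice(len(points), n_nodes, replace=False)].astype(float)
    for it in range(n_iter):
        frac = it / n_iter
        eps_t = eps[0] * (eps[1] / eps[0]) ** frac  # decaying learning rate
        lam_t = lam[0] * (lam[1] / lam[0]) ** frac  # shrinking neighbourhood range
        x = points[rng.integers(len(points))]
        ranks = np.argsort(np.argsort(np.linalg.norm(nodes - x, axis=1)))
        nodes += (eps_t * np.exp(-ranks / lam_t))[:, None] * (x - nodes)
    return nodes


def quantization_error(points, nodes):
    """Mean distance from each point to its nearest node."""
    d = np.linalg.norm(points[:, None, :] - nodes[None, :, :], axis=2)
    return float(d.min(axis=1).mean())


# Synthetic probed plate: a 20 x 20 grid with a dent in the middle (hypothetical data).
g = np.linspace(0.0, 1.0, 20)
xx, yy = np.meshgrid(g, g)
zz = -0.2 * np.exp(-((xx - 0.5) ** 2 + (yy - 0.5) ** 2) / 0.01)
cloud = np.column_stack([xx.ravel(), yy.ravel(), zz.ravel()])
nodes = train_neural_gas(cloud)
print(nodes.shape, quantization_error(cloud, nodes))
```

Because every update is a convex combination of a node and a data point, the nodes always stay within the extent of the measured cloud.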

Support:

Publications:

• B. Tawbe and A.-M. Cretu, "Acquisition and Prediction of Soft Object Shape Using a Kinect and a Force-Torque Sensor", MDPI Sensors, vol. 17, art. 1083, 2017, doi: 10.3390/s17051083.

• B. Tawbe and A.-M. Cretu, "Data-Driven Representation of Soft Deformable Objects Based on Force-Torque Data and 3D Vision Measurements", 3rd Int. Electron. Conf. Sensors and Applications, Nov. 2016, Sciforum El. Conf. Series, vol. 3, 2016, E006, doi: 10.3390/ecsa-3-E006.

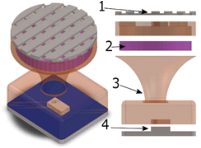


The ill-defined nature of tactile information makes tactile sensing the last frontier on the way to robots that can handle everyday objects and interact with humans through contact. To cross this frontier, many design aspects have to be considered: sensor placement, electronic/mechanical hardware, methods to access and acquire signals, calibration, and algorithms to process and interpret sensing data in real time. This project aims at the design and hardware implementation of a bio-inspired tactile module that comprises a 32-taxel array, a 9-DOF MARG (Magnetic, Angular Rate, and Gravity) sensor, a flexible compliant structure and a deep pressure sensor.

Publications:

• T.E.A. Oliveira, A.-M. Cretu and E.M. Petriu, "A Multi-Modal Bio-Inspired Tactile Sensing Module for Surface Characterization", MDPI Sensors, vol. 17, art. 1187, 2017, doi: 10.3390/s17061187.

• T.E.A. Oliveira, A.-M. Cretu and E.M. Petriu, "Multi-Modal Bio-Inspired Tactile Module", IEEE Sensors, vol. 17, issue 11, pp. 3231-3243, Apr. 2017.

• T.E.A. Oliveira, B.M.R. Lima, A.-M. Cretu and E.M. Petriu, "Tactile Profile Classification Using a Multimodal MEMs-Based Sensing Module", 3rd Int. Electron. Conf. Sensors and Applications, 15-30 Nov. 2016, Sciforum El. Conf. Series, vol. 3, 2016, E007, doi: 10.3390/ecsa-3-E007.

• T.E.A. Oliveira, V.P. Fonseca, A.-M. Cretu, E.M. Petriu, "Multi-Modal Bio-Inspired Tactile Module", UOttawa Graduate and Research Day, University of Ottawa, Mar. 2016 (Research Poster Prize in Electrical Engineering, first place; IEEE Research Poster Prize, first place).


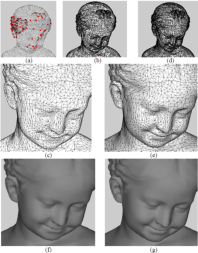

This project aims at proposing automated solutions for 3D object modeling at multiple resolutions in the context of virtual reality.

An original solution, based on an unsupervised neural network, is proposed to guide the creation of selectively densified meshes. A neural gas network adapts its nodes during training to capture the embedded shape of the object. Regions of interest are then identified as areas with a higher density of nodes in the adapted neural gas map. Meshes at different levels of detail for an object, which preserve these regions of interest, are constructed by adapting a classical simplification algorithm. The simplification process therefore only affects the regions of lower interest, ensuring that the characteristics of an object are preserved even at lower resolutions. A novel learning-based solution is proposed to select the number of faces for the discrete models of an object at different resolutions. Finally, selectively densified object meshes are incorporated in a discrete level-of-detail method for presentation in virtual reality applications.
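Identifying regions of interest as areas of higher node density can be sketched as below. Three elements are illustrative assumptions rather than the published criteria:

- the density measure (inverse mean distance to the k nearest nodes);
- the percentile threshold;
- the hypothetical `protected_vertices` helper, which marks the mesh vertices a simplification step would leave untouched.

```python
import numpy as np


def node_density(nodes: np.ndarray, k: int = 5) -> np.ndarray:
    """Inverse of the mean distance from each node to its k nearest other nodes."""
    d = np.linalg.norm(nodes[:, None, :] - nodes[None, :, :], axis=2)
    np.fill_diagonal(d, np.inf)  # ignore each node's zero distance to itself
    knn = np.sort(d, axis=1)[:, :k]
    return 1.0 / (knn.mean(axis=1) + 1e-12)


def roi_nodes(nodes: np.ndarray, k: int = 5, percentile: float = 75.0) -> np.ndarray:
    """Indices of nodes whose local density lies in the top (100 - percentile)%."""
    dens = node_density(nodes, k)
    return np.flatnonzero(dens >= np.percentile(dens, percentile))


def protected_vertices(vertices, nodes, roi, radius):
    """Mesh vertices within `radius` of a region-of-interest node; a
    simplification pass would only be allowed to remove the other vertices."""
    d = np.linalg.norm(vertices[:, None, :] - nodes[roi][None, :, :], axis=2)
    return np.flatnonzero(d.min(axis=1) <= radius)


rng = np.random.default_rng(0)
dense = 5.0 + 0.01 * rng.standard_normal((20, 3))  # tightly packed nodes (detail)
sparse = rng.uniform(0.0, 1.0, (20, 3))             # spread-out nodes (flat area)
nodes = np.vstack([dense, sparse])
roi = roi_nodes(nodes)
print(roi)
```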

Another track of the project aims at developing an original application of biologically-inspired visual attention for improved perception-based modeling of 3D objects. In an initial step, an adapted computational model of visual attention is used to identify areas of interest over the 3D shape of an object. Points of interest are then identified as the centroids of these salient regions and, together with their immediate n-neighbors, are integrated into a continuous distance-dependent level-of-detail method using a simplification algorithm.
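The point-of-interest step can be sketched as follows. The sketch assumes the visual-attention model has already labeled each vertex with a salient-region index (or -1 when the vertex is not salient). Two simplifying assumptions are made:

- each region centroid is snapped to its nearest vertex;
- the "immediate n-neighbors" are taken as the n nearest vertices by Euclidean distance.

A mesh-based implementation might instead use n-ring adjacency. The function name is hypothetical.

```python
import numpy as np


def points_of_interest(vertices: np.ndarray, region_labels: np.ndarray,
                       n_neighbors: int = 8) -> dict:
    """Map each salient-region label (>= 0) to (poi_index, neighbour_indices):
    the vertex nearest to the region centroid and its n nearest vertices."""
    result = {}
    for label in np.unique(region_labels[region_labels >= 0]):
        centroid = vertices[region_labels == label].mean(axis=0)
        poi = int(np.argmin(np.linalg.norm(vertices - centroid, axis=1)))
        d = np.linalg.norm(vertices - vertices[poi], axis=1)
        neighbours = np.argsort(d)[1 : n_neighbors + 1]  # index 0 is the POI itself
        result[int(label)] = (poi, neighbours)
    return result


# Ten vertices on a line; the attention model flagged two salient regions.
verts = np.array([[float(i), 0.0, 0.0] for i in range(10)])
labels = np.array([-1, -1, 0, 0, 0, -1, -1, 1, 1, 1])
for region, (poi, nbrs) in points_of_interest(verts, labels, n_neighbors=2).items():
    print(region, poi, sorted(nbrs.tolist()))
```

The selected indices are the vertices a simplification algorithm would be asked to keep when building the coarser levels of detail.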

Support:


Publications:

• G. Rouhafzay and A.-M. Cretu, "Perceptually Improved 3D Object Representation Based on Guided Adaptive Weighting of Feature Channels of a Visual-Attention Model", Springer 3D Research, vol. 9, issue 29, Sep. 2018, pp. 1-21, doi: 10.1007/s13319-018-0181-z.

• G. Rouhafzay and A.-M. Cretu, "Selectively-Densified Mesh Construction for Virtual Reality Applications Using Visual Attention Derived Salient Points", IEEE Int. Conf. Computational Intelligence and Virtual Environments for Measurement Systems and Applications, Annecy, France, pp. 99-104, 2017.

• M. Chagnon-Forget, G. Rouhafzay, A.-M. Cretu and S. Bouchard, "Enhanced Visual-Attention for Perceptually-Improved 3D Object Modeling in Virtual Environments", 3D Research, vol. 7, issue 4, pp. 1-18, 2016.

• A.-M. Cretu, M. Chagnon-Forget and P. Payeur, "Selectively Densified 3D Object Modeling Based on Regions of Interest Using Neural Gas Networks", accepted for publication, Soft Computing, 2016, doi: 10.1007/s00500-016-2132-z.

• H. Monette-Thériault, A.-M. Cretu, and P. Payeur, "3D Object Modeling with Neural Gas Based Selective Densification of Surface Meshes", IEEE Int. Conf. Systems, Man, and Cybernetics, pp. 1373-1378, San Diego, US, 2014.


The project addresses the design and implementation of an automated framework that provides the necessary information to the controller of a robotic hand to ensure safe, model-based manipulation of 3D deformable objects. Measurements of the interaction force at the fingertips and of the fingertip positions of a three-fingered robotic hand are associated with the contours of a deformed object, tracked in a series of images using neural-network approaches. The resulting model not only captures the behavior of the object but is also able to predict its behavior for previously unseen interactions, without any assumption on the object's material. Such models allow the controller of the robotic hand to achieve better-controlled grasps and more elaborate manipulation capabilities.
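The mapping from fingertip measurements to the tracked contour can be illustrated with a one-hidden-layer feedforward network trained by full-batch gradient descent. This is a hypothetical stand-in for the neural-network approaches mentioned above. The inputs (normalized force and fingertip position), the four contour points used as outputs, the class name and the synthetic data are all assumptions made for illustration only.

```python
import numpy as np


class TinyMLP:
    """One-hidden-layer feedforward network (tanh hidden layer, linear output)
    trained by full-batch gradient descent on the mean squared error."""

    def __init__(self, n_in: int, n_hidden: int, n_out: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.W1 = rng.normal(0.0, 1.0 / np.sqrt(n_in), (n_in, n_hidden))
        self.b1 = np.zeros(n_hidden)
        self.W2 = rng.normal(0.0, 1.0 / np.sqrt(n_hidden), (n_hidden, n_out))
        self.b2 = np.zeros(n_out)

    def predict(self, X: np.ndarray) -> np.ndarray:
        return np.tanh(X @ self.W1 + self.b1) @ self.W2 + self.b2

    def fit(self, X: np.ndarray, Y: np.ndarray, lr: float = 0.05, epochs: int = 3000) -> list:
        losses = []
        for _ in range(epochs):
            H = np.tanh(X @ self.W1 + self.b1)            # hidden activations
            err = H @ self.W2 + self.b2 - Y               # prediction error
            losses.append(float(np.mean(err ** 2)))
            g_out = 2.0 * err / err.size                  # d(loss)/d(output)
            g_hid = (g_out @ self.W2.T) * (1.0 - H ** 2)  # back through tanh
            self.W2 -= lr * (H.T @ g_out)
            self.b2 -= lr * g_out.sum(axis=0)
            self.W1 -= lr * (X.T @ g_hid)
            self.b1 -= lr * g_hid.sum(axis=0)
        return losses


# Hypothetical data: normalized fingertip force and position as inputs,
# displacement of four tracked contour points as outputs.
rng = np.random.default_rng(1)
force, pos = rng.uniform(0.0, 1.0, (2, 200))
X = np.column_stack([force, pos])
centres = np.linspace(0.0, 1.0, 4)
Y = force[:, None] * np.exp(-((pos[:, None] - centres[None, :]) ** 2) / 0.05)

model = TinyMLP(n_in=2, n_hidden=16, n_out=4)
losses = model.fit(X, Y)
print(f"MSE: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

Once trained, `model.predict` can be queried for force/position combinations that were not in the training set. This is the "previously unseen interactions" use described above, with all the usual caveats about extrapolation.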


Publications:

• A.-M. Cretu, P. Payeur, and E.M. Petriu, "Soft Object Deformation Monitoring and Learning for Model-Based Robotic Hand Manipulation", IEEE Trans. Systems, Man and Cybernetics - Part B, vol. 42, no. 3, Jun. 2012.

• A.-M. Cretu, P. Payeur, and E.M. Petriu, "Learning and Prediction of Soft Object Deformation using Visual Analysis of Robot Interaction", Proc. Int. Symp. Visual Computing, ISVC2010, Las Vegas, Nevada, USA, G. Bebis et al. (Eds), LNCS 6454, pp. 232-241, Springer, 2010.

• F. Khalil, P. Payeur, and A.-M. Cretu, "Integrated Multisensory Robotic Hand System for Deformable Object Manipulation", Proc. Int. Conf. Robotics and Applications, pp. 159-166, Cambridge, Massachusetts, US, 2010.

• A.-M. Cretu, P. Payeur, E.M. Petriu and F. Khalil, "Estimation of Deformable Object Properties from Visual Data and Robotic Hand Interaction Measurements for Virtualized Reality Applications", Proc. IEEE Int. Symp. Haptic Audio Visual Environments and Their Applications, pp. 168-173, Phoenix, AZ, Oct. 2010.

• A.-M. Cretu, P. Payeur, E.M. Petriu and F. Khalil, "Deformable Object Segmentation and Contour Tracking in Image Sequences Using Unsupervised Networks", Proc. Canadian Conf. Computer and Robot Vision, pp. 277-284, Ottawa, Canada, May 2010.


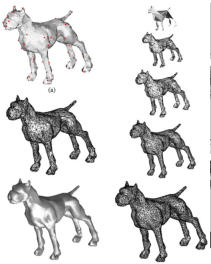

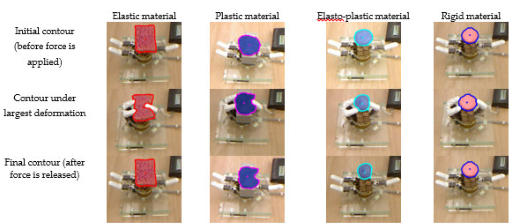

Performing tasks with a robot hand often requires complete knowledge of the manipulated object, including its properties (shape, rigidity, surface texture) and its location in the environment, in order to ensure safe and efficient manipulation. While well-established procedures exist for the manipulation of rigid objects, as well as several approaches for the manipulation of linear or planar deformable objects such as ropes or fabric, research addressing the characterization of deformable objects occupying a volume remains relatively limited. The project proposes an approach for tracking the deformation of non-rigid objects under robot hand manipulation using RGB-D data. The purpose is to automatically classify deformable objects as rigid, elastic, plastic, or elasto-plastic, based on the material they are made of, and to support recognition of the category of such objects through a robotic probing process in order to enhance manipulation capabilities. The proposed approach advantageously combines classical color and depth image processing techniques, and proposes a novel combination of the fast level set method with a log-polar mapping of the visual data to robustly detect and track the contour of a deformable object in an RGB-D data stream. Dynamic time warping is employed to characterize the object properties independently of the varying length of the tracked contour as the object deforms. The proposed solution achieves a classification rate of up to 98.3% over all material categories. When integrated in the control loop of a robot hand, it can contribute to a stable grasp and to a safe manipulation capability that preserves the physical integrity of the object.
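Dynamic time warping is what makes sequences of different lengths comparable. The sketch below is minimal and rests on several illustrative assumptions:

- each tracked contour is summarized as a radial signature (the distance of each contour point from the contour centroid, in contour order);
- a signature is classified by its nearest reference under DTW;
- the reference shapes and the class labels are invented for the example.

The fast level set and log-polar tracking steps are not shown.

```python
import numpy as np


def dtw_distance(a, b) -> float:
    """Classic dynamic time warping distance between two 1D sequences,
    using the absolute difference as the local cost."""
    n, m = len(a), len(b)
    D = np.full((n + 1, m + 1), np.inf)
    D[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(a[i - 1] - b[j - 1])
            D[i, j] = cost + min(D[i - 1, j], D[i, j - 1], D[i - 1, j - 1])
    return float(D[n, m])


def radial_signature(contour: np.ndarray) -> np.ndarray:
    """Distance of each contour point from the contour centroid, in contour order."""
    return np.linalg.norm(contour - contour.mean(axis=0), axis=1)


def classify(signature, references: dict) -> str:
    """Label of the reference signature with the smallest DTW distance (1-NN)."""
    def best(label):
        return min(dtw_distance(signature, ref) for ref in references[label])
    return min(references, key=best)


def ellipse(a: float, b: float, n: int) -> np.ndarray:
    t = np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)
    return np.column_stack([a * np.cos(t), b * np.sin(t)])


# Hypothetical references; the contours deliberately have different lengths.
refs = {
    "round": [radial_signature(ellipse(1.0, 1.0, 80))],
    "elongated": [radial_signature(ellipse(1.5, 0.6, 60))],
}
print(classify(radial_signature(ellipse(1.0, 1.0, 50)), refs))
print(classify(radial_signature(ellipse(1.5, 0.6, 70)), refs))
```

The quadratic-time table is fine for short signatures. Longer sequences usually call for a warping-window constraint.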

Collaborators:


Publications:

• F. Hui, P. Payeur and A.-M. Cretu, "Visual Tracking and Classification of Soft Object Deformation under Robot Hand Interaction", MDPI Robotics, vol. 6, issue 1, art. 5, 2017, doi: 10.3390/robotics6010005.

• F. Hui, P. Payeur, and A.-M. Cretu, "In-Hand Object Material Characterization with Fast Level Set in Log-Polar Domain and Dynamic Time Warping", IEEE Int. Conf. Instrumentation and Measurement Technology, pp. 730-735, Torino, Italy, May 2017.


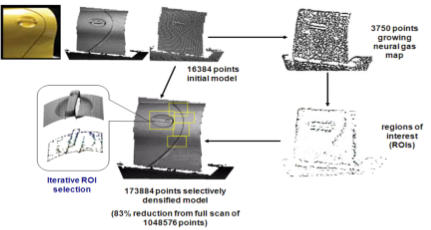

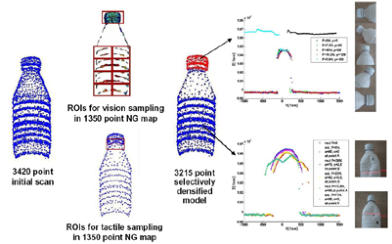

This project explores some aspects of intelligent sensing for advanced robotic applications, with the main objective of designing innovative approaches for the automatic selection of regions of observation for fixed and mobile sensors, so that only relevant measurements are collected without human guidance. The proposed neural gas network solution selects regions of interest for further sampling from a cloud of sparsely collected 3D measurements. The technique automatically determines bounded areas where sensing is required at higher resolution to accurately map 3D surfaces. It therefore provides significant benefits over brute-force strategies, as scanning time is reduced and the size of the dataset is kept manageable.
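Turning selected nodes into bounded areas to rescan can be sketched as follows. The sketch assumes the nodes of interest have already been picked out of the trained neural gas map. The grouping and box construction are hypothetical choices made for illustration, as are the function names:

- nodes are grouped by single linkage with a distance threshold;
- each group is wrapped in an axis-aligned box with a margin.

```python
import numpy as np


def group_nodes(nodes: np.ndarray, link_dist: float) -> np.ndarray:
    """Single-linkage grouping: nodes chained by gaps < link_dist share a label."""
    labels = np.full(len(nodes), -1)
    current = 0
    for start in range(len(nodes)):
        if labels[start] >= 0:
            continue
        labels[start] = current
        stack = [start]
        while stack:  # flood-fill every node reachable from `start`
            i = stack.pop()
            near = np.flatnonzero(np.linalg.norm(nodes - nodes[i], axis=1) < link_dist)
            for j in near:
                if labels[j] < 0:
                    labels[j] = current
                    stack.append(j)
        current += 1
    return labels


def scan_boxes(nodes: np.ndarray, link_dist: float, margin: float = 0.0) -> list:
    """One axis-aligned (min_corner, max_corner) box per group of nodes,
    padded by `margin`, to be rescanned at a higher resolution."""
    labels = group_nodes(nodes, link_dist)
    return [
        (nodes[labels == g].min(axis=0) - margin, nodes[labels == g].max(axis=0) + margin)
        for g in range(labels.max() + 1)
    ]


# Hypothetical nodes of interest: two separate patches of a sparse scan.
selected = np.array([[0, 0, 0], [0.1, 0, 0], [0, 0.1, 0], [5, 5, 5], [5.2, 5, 5]], dtype=float)
for lo, hi in scan_boxes(selected, link_dist=0.5, margin=0.05):
    print(lo, hi)
```

Only the returned boxes would be handed to the sensor for dense scanning, which is where the savings in scanning time and dataset size come from.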

Collaborators/Support:

Publications:

•

P. Payeur, P. Curtis, and A.-M. Cretu, "Computational Methods for Selective Acquisition of Depth Measurements: an Experimental Evaluation", Int. Conf. Advanced Concepts for Intelligent Vision Systems, Poznan, Poland, J. Blanc-Talon et al. (Eds.), LNCS 8192, pp. 389-401, 2013.

•

P. Payeur, P. Curtis, and A.-M. Cretu, "Computational Methods for Selective Acquisition of Depth Measurements in Machine Perception", IEEE Int. Conf. Systems, Man, and Cybernetics, Manchester, UK, pp. 876-881, 2013.

•

A.-M. Cretu, P. Payeur, and E.M. Petriu, "Selective Range Data Acquisition Driven by Neural Gas Networks", IEEE Trans. Instrumentation and Measurement, vol. 58, no. 6, pp. 2634-2642, 2009.

•

A.-M. Cretu, P. Payeur, and E.M. Petriu, "Neural Gas and Growing Neural Gas Networks for Selective 3D Sensing: A Comparative Study", Sensors & Transducers, vol. 5, pp. 119-134, 2009.

•

A.-M. Cretu, P. Payeur, and E.M. Petriu, "Selective Tactile Data Acquisition on 3D Deformable Objects for Virtualized Reality Applications", Proc. IEEE Int. Workshop Computational Intelligence in Virtual Environments, pp. 14-19, Nashville, TN, USA, Apr. 2009.

•

A.-M. Cretu, E.M. Petriu, and P. Payeur, "Growing Neural Gas Networks for Selective 3D Scanning", Proc. IEEE Int. Workshop on Robotic and Sensors Environments, pp. 108-113, Ottawa, Canada, Oct. 2008.

•

A.-M. Cretu, P. Payeur, and E.M. Petriu, "Selective Vision Sensing Based on Neural Gas Network", Proc. IEEE Int. Conf. Instrumentation and Measurement Technology, pp. 478-483, Vancouver, Canada, May 2008 (Best Student Paper Award).


This work presents a general-purpose scheme for measuring, constructing and representing the geometric and elastic behavior of deformable objects without a priori knowledge of the shape or of the material that the objects under study are made of. The proposed solution is based on an advantageous combination of neural network architectures and an original force-deformation measurement procedure. An innovative non-uniform selective data acquisition algorithm based on self-organizing neural architectures (namely neural gas and growing neural gas) is developed to selectively and iteratively identify regions of interest and guide the acquisition of data only at those points that are relevant for both the geometric model and the mapping of the elastic behavior, starting from a sparse point cloud of an object. Multi-resolution object models are obtained by taking the initial sparse model, or the neural gas (or growing neural gas) map if a more compressed model is desired, and augmenting it with the higher-resolution measurements selectively collected over the regions of interest. A feed-forward neural network is then employed to capture the complex relationship between, on one side, an applied force (its magnitude, its angle of application and its point of interaction), the object pose and the deformation stage of the object, and, on the other side, the object surface deformation for each region with similar geometric and elastic behavior. The proposed framework works directly from raw range data and obtains compact point-based models. It can deal with different types of materials, distinguishes between the different stages of deformation of an object, and models both homogeneous and non-homogeneous objects. It also offers the desired degree of control to the user.
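To make the force-deformation mapping more concrete, here is a minimal NumPy sketch of a one-hidden-layer feed-forward network trained by full-batch gradient descent to regress a surface displacement from the interaction parameters listed above. The input encoding (force magnitude, angle of application, 2D contact coordinates, a single pose angle, an integer deformation stage), the network size, the learning rate and the synthetic ground truth (a made-up indentation model) are all assumptions for illustration only; real training data would come from the force-deformation measurements, and the actual architecture used in the thesis may differ.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_synthetic(n=500):
    """Made-up data: columns are [force magnitude (N), angle to the normal (rad),
    contact x, contact y, pose angle (rad), deformation stage 0..2]."""
    X = np.column_stack([
        rng.uniform(0.5, 5.0, n),
        rng.uniform(0.0, np.pi / 3, n),
        rng.uniform(-1.0, 1.0, n),
        rng.uniform(-1.0, 1.0, n),
        rng.uniform(-np.pi, np.pi, n),
        rng.integers(0, 3, n),
    ])
    stiffness = 2.0 + 1.5 * X[:, 5]                       # assumption: stiffer at later stages
    y = (X[:, 0] * np.cos(X[:, 1]) / stiffness)[:, None]  # synthetic indentation depth
    return X, y

class FeedForward:
    """One tanh hidden layer, linear output, trained with full-batch gradient descent on MSE."""
    def __init__(self, n_in, n_hidden, n_out):
        self.W1 = rng.normal(0.0, 1.0 / np.sqrt(n_in), (n_in, n_hidden))
        self.b1 = np.zeros(n_hidden)
        self.W2 = rng.normal(0.0, 1.0 / np.sqrt(n_hidden), (n_hidden, n_out))
        self.b2 = np.zeros(n_out)

    def forward(self, X):
        self.H = np.tanh(X @ self.W1 + self.b1)
        return self.H @ self.W2 + self.b2

    def step(self, X, y, lr=0.03):
        err = self.forward(X) - y
        g_out = 2.0 * err / len(X)                         # d(MSE)/d(prediction)
        g_hid = (g_out @ self.W2.T) * (1.0 - self.H ** 2)  # back-propagate through tanh
        grads = (X.T @ g_hid, g_hid.sum(axis=0), self.H.T @ g_out, g_out.sum(axis=0))
        for param, grad in zip((self.W1, self.b1, self.W2, self.b2), grads):
            param -= lr * grad                             # in-place parameter update
        return float(np.mean(err ** 2))

X, y = make_synthetic()
mu, sd = X.mean(axis=0), X.std(axis=0)
Xn = (X - mu) / sd                                         # standardize inputs
net = FeedForward(n_in=6, n_hidden=16, n_out=1)
losses = [net.step(Xn, y) for _ in range(4000)]
print(f"MSE: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

Standardizing the inputs keeps the heterogeneous features (newtons, radians, stage indices) on a comparable scale, which is why the network can be trained with a single fixed learning rate.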

Support:

Publication:

•

A.M. Cretu, “Experimental Data Acquisition and Modeling of 3D Objects using Neural Networks”, Ph.D. Thesis, University of Ottawa, 2009.

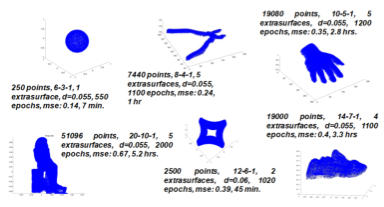


This project presents a critical comparison between three neural architectures for 3D object representation in terms of purpose, computational cost, complexity, conformance and convenience, ease of manipulation and potential uses in the context of virtualized reality. Starting from a point cloud that embeds the shape of the object to be modeled, a volumetric representation is obtained using a multilayered feed-forward neural network, or a surface representation using either the self-organizing map or the neural gas network. The representation provided by the neural networks is simple, compact and accurate. The models can be easily transformed in size and position (affine transformations) and in shape (deformation). Potential uses of the presented architectures in the context of virtualized reality include the modeling of set operations and object morphing, the detection of collisions between objects, object recognition, object motion estimation and segmentation.
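As an illustration of the surface-representation option, the following minimal NumPy sketch fits a Kohonen self-organizing map with a 2D lattice to a synthetic point cloud, so that the trained weights form a grid of surface nodes whose lattice neighbors define quad faces. It then applies an affine transformation directly to the nodes, which is one simple reading of why such point-based models are easy to resize and reposition. The SOM update itself is standard; the lattice size, learning-rate and neighborhood schedules, the hemisphere data and the `affine` helper are assumptions chosen for the example, not the configuration used in the cited work.

```python
import numpy as np

def train_som(points, rows=10, cols=10, iters=5000, lr=(0.5, 0.01), sigma=(3.0, 0.5), seed=0):
    """Kohonen SOM with a 2D lattice; the trained weights form a rows x cols surface grid."""
    rng = np.random.default_rng(seed)
    lattice = np.array([(r, c) for r in range(rows) for c in range(cols)], dtype=float)
    weights = points[rng.choice(len(points), rows * cols, replace=False)].astype(float)
    for t in range(iters):
        frac = t / iters
        lr_t = lr[0] * (lr[1] / lr[0]) ** frac                # decaying learning rate
        sig_t = sigma[0] * (sigma[1] / sigma[0]) ** frac      # shrinking lattice neighborhood
        x = points[rng.integers(len(points))]
        bmu = np.argmin(np.linalg.norm(weights - x, axis=1))  # best-matching unit
        d2 = np.sum((lattice - lattice[bmu]) ** 2, axis=1)    # squared distance on the lattice
        weights += lr_t * np.exp(-d2 / (2.0 * sig_t ** 2))[:, None] * (x - weights)
    return weights.reshape(rows, cols, 3)

def affine(model, scale, rotation, translation):
    """Scale, rotate, then translate every node of a point-based model."""
    return (model.reshape(-1, 3) * scale) @ rotation.T + translation

# Synthetic point cloud: 1000 points spread uniformly over the upper unit hemisphere.
rng = np.random.default_rng(1)
theta = rng.uniform(0.0, 2.0 * np.pi, 1000)
phi = np.arccos(rng.uniform(0.0, 1.0, 1000))
cloud = np.column_stack([np.sin(phi) * np.cos(theta), np.sin(phi) * np.sin(theta), np.cos(phi)])

surface = train_som(cloud)  # shape (10, 10, 3); lattice neighbors define quad faces
rot_z = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])  # 90 degrees about z
moved = affine(surface, scale=2.0, rotation=rot_z, translation=np.array([0.0, 0.0, 5.0]))
```

Because the model is just an array of node coordinates, an affine transformation costs one matrix product over 100 nodes rather than over the full point cloud. Each update is a convex combination of the current node and a sample, so the nodes always stay inside the convex hull of the input points.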

Publications:

•

A.-M. Cretu, "Neural Network Modeling of 3D Objects for Virtualized Reality Applications", M.A.Sc. Thesis, University of Ottawa, 2003.

•

A.-M. Cretu and E.M. Petriu, "Neural Network-Based Adaptive Sampling of 3D Object Surface Elastic Properties", IEEE Trans. Instrumentation and Measurement, vol. 55, no. 2, pp. 483-492, 2006.

•

A.-M. Cretu, E.M. Petriu, and G.G. Patry, "Neural-Network Based Models of 3D Objects for Virtualized Reality: A Comparative Study", IEEE Trans. Instrumentation and Measurement, vol. 55, no. 1, pp. 99-111, 2006.

•

A.-M. Cretu, E.M. Petriu, and G.G. Patry, "A Comparison of Neural Network Architectures for the Geometric Modeling of 3D Objects", Proc. Conf. Computational Intelligence for Measurement Systems and Applications, pp. 155-160, Boston, USA, Jul. 2004.

•

A.-M. Cretu, E.M. Petriu, and G.G. Patry, "Neural Network Architecture for 3D Object Representation", Proc. IEEE Int. Workshop on Haptic, Audio and Visual Environments and Their Applications, pp. 31-36, Ottawa, Canada, Sep. 2003.


Research

© Ana-Maria Cretu 2019

Ana-Maria Cretu

Sensor systems for multisensory data acquisition

Multimodal biologically-inspired tactile sensing

Perceptually-improved multi-resolution 3D object modeling

Deformable object tracking and modeling for robotic hand manipulation

Deformable object tracking for material characterization

Selective range data acquisition

Experimental data acquisition and modeling of 3D deformable objects using neural networks

Neural network modeling of 3D objects for virtualized reality applications

Virtual environments for phobia treatment

Motion tracking and imitation for natural human-machine interaction